What is a convolutional neural network?

A convolutional neural network (CNN) is a type of deep-learning artificial intelligence network used in image processing [1]. The network takes an input image and, having been trained on a large catalogue of labelled images, outputs the class (for example, a diagnosis) that best matches the input image. Neural networks are loosely modelled on interconnected biological neurons and are structured so that they learn and improve their performance, based on how many images the CNN ‘sees’ during training. Their defining operation is convolution: a mathematical operation that combines the input image with a small filter to create a third output (a feature map) showing where, and how strongly, the feature described by the filter appears.

CNNs are seen as a beneficial new tool for dermatologists to help better diagnose lesions. The work that a CNN does to produce a diagnostic result from an image is similar to how a dermatologist uses their training and knowledge: diagnosing lesions by a dermatologist generally involves an input image (of a cutaneous lesion) being fed through a processing network (the skills and knowledge of the dermatologist who analyses it and synthesises available information) to output a ‘class’ (or diagnosis) or a ‘probability of classes’ (differential diagnosis) [2].

The visual nature of dermatology lends itself well to digital lesion imaging, and CNNs have huge potential to change practice. CNN analysis is a multifaceted way of analysing data that combines biology, mathematics and computer science; it involves complex mathematics and requires substantial computational power.

Who uses convolutional neural networks?

CNNs have been used in military and civil applications, including unmanned aerial vehicles, the technology sector, and commerce [3]. They are found in everyday applications such as social media platforms that automatically recognise faces, in self-tagging photo galleries, and on shopping websites that come up with suggestions based on your internet browsing habits.

In the medical field, researchers have been using CNNs to diagnose diabetic eye disease, arrhythmias, and skin cancers [3–5].

Tell me more about convolutional neural networks

The basis of a CNN is a computer method that can differentiate between image classes based on distinctive features, such as edges and curves, that reliably identify each class. Building on this base, more abstract features are added through a series of convolutional, pooling and output layers.

Step 1: Convolutional layers

An image is fed into a computer and processed as a grid of pixels (dots), each given a numerical value based on its colour. A small filter is then slid across the image, section by section, and at each position the pixel values are combined with the filter's values [2]. The filters often start off analysing simple features like straight lines, diagonal lines, curved lines, or dots. Each pass of a filter over the original image creates a new, usually slightly smaller, version of the image (a feature map). Areas that match the filter are assigned high positive values, and areas that do not match are assigned low or negative values. This results in a convolved image; for example, a straight-line filter passed over an image of an acral naevus with a parallel furrow pattern on dermoscopy will produce a strong positive response. This step can be repeated with further features to achieve a more accurate output (ie, a diagnosis or differential diagnosis).
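To make the convolution step more concrete, here is a minimal Python sketch, assuming a tiny greyscale image stored as a list of rows of numbers. The image and the vertical-line filter are hypothetical and hand-chosen for illustration; in a real CNN the filter values are learned during training rather than written by hand.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding) and return the feature map.

    Like most CNN software, this does not flip the kernel, so strictly it is
    cross-correlation, which is what CNNs conventionally call "convolution".
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1  # without padding, the output is smaller than the input
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the image patch currently under the filter
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map


# Hypothetical 5 x 5 greyscale image: 1 = bright pixel, 0 = dark pixel,
# with a bright vertical line running down the middle column.
image = [[0, 0, 1, 0, 0] for _ in range(5)]

# Hypothetical hand-made vertical-line filter (a trained CNN learns its own values):
# it rewards a bright centre column and penalises bright neighbouring columns.
vertical_line = [
    [-1, 2, -1],
    [-1, 2, -1],
    [-1, 2, -1],
]

for row in convolve2d(image, vertical_line):
    print(row)  # each row is [-3, 6, -3]: a strong match only where the line is
```

The 5 x 5 image produces a 3 x 3 feature map, which is why each pass of a filter yields a slightly smaller image unless the edges are padded.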

Step 2: Pooling layer

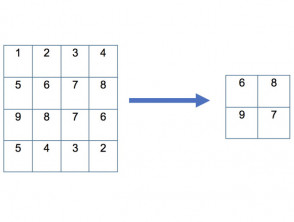

If the resulting image is large, the output of a convolutional layer may be “pooled” before the next convolution. Pooling shrinks the image by summarising each small region with a single value, keeping the strongest responses while reducing the number of values (and parameters) the network must process [2]. There are different types of pooling, but the most common is maximum pooling, in which only the largest value in each region is kept (see figure).

A simple “maximum pooling” of a 4 x 4 image into a 2 x 2 output
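Maximum pooling can be sketched in a few lines of Python. This is a minimal illustration assuming non-overlapping 2 x 2 windows (a stride of 2); the 4 x 4 values are hypothetical and are not taken from the figure.

```python
def max_pool(feature_map, size=2):
    """Non-overlapping max pooling: keep the largest value in each size x size window."""
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            window = [feature_map[i + di][j + dj] for di in range(size) for dj in range(size)]
            row.append(max(window))  # the strongest response in this region survives
        pooled.append(row)
    return pooled


# Hypothetical 4 x 4 feature map
fm = [
    [1, 3, 2, 1],
    [4, 6, 5, 0],
    [3, 1, 1, 2],
    [0, 2, 7, 4],
]
print(max_pool(fm))  # [[6, 5], [3, 7]]
```

Sixteen values become four, but the largest value from each quarter of the image is preserved.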

There is often an additional normalisation step, a common technique for improving the performance and stability of a neural network. It works by standardising the inputs analysed by the neural network so that each input has roughly the same scale. Thus, the neural network will not assign undue significance to one input filter over another simply because of a difference in scale. This can substantially speed up the training of a neural network.
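A minimal sketch of the standardising idea behind normalisation, assuming a plain list of values. CNNs commonly use batch normalisation, which standardises each channel across a batch of images and adds learnable scale and shift parameters; those details are omitted here, and the input values are hypothetical.

```python
import math


def standardise(values, eps=1e-8):
    """Rescale values to mean 0 and standard deviation (approximately) 1.

    `eps` avoids division by zero when all values are identical.
    """
    n = len(values)
    mean = sum(values) / n
    variance = sum((v - mean) ** 2 for v in values) / n
    return [(v - mean) / math.sqrt(variance + eps) for v in values]


# Hypothetical filter responses carrying the same pattern on very different scales
small_scale = [0.1, 0.2, 0.3]
large_scale = [100.0, 200.0, 300.0]

print(standardise(small_scale))
print(standardise(large_scale))
```

Both inputs come out at approximately -1.22, 0 and 1.22, so neither dominates simply because its raw numbers are larger.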

Step 3: Output layer

To generate a differential diagnosis for a suspected lesion, the neural network applies a fully connected layer, which combines the features from all the layers it has previously processed into a score for each possible class; these scores are usually converted into probabilities. This is akin to a dermatologist synthesising the different clues into a provisional diagnosis with a set of differentials.
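The output layer can be sketched as a fully connected layer followed by a softmax function, a common choice (assumed here) that turns raw class scores into a ‘probability of classes’. The lesion classes, features, weights and biases below are all hypothetical; in a trained CNN the features come from the earlier convolution and pooling layers and the weights are learned.

```python
import math


def fully_connected(features, weights, biases):
    """Each output score is a weighted sum of every input feature plus a bias."""
    return [sum(w * x for w, x in zip(row, features)) + b for row, b in zip(weights, biases)]


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtracting the maximum keeps math.exp from overflowing
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


classes = ["naevus", "melanoma", "seborrhoeic keratosis"]  # hypothetical labels
features = [0.9, 0.1, 0.4]  # hypothetical features from earlier layers
weights = [
    [2.0, -1.0, 0.5],
    [-0.5, 1.5, 0.2],
    [0.3, 0.2, 1.0],
]
biases = [0.0, 0.0, 0.0]

probs = softmax(fully_connected(features, weights, biases))
# Print a ranked "differential diagnosis", most likely class first
for name, p in sorted(zip(classes, probs), key=lambda t: -t[1]):
    print(f"{name}: {p:.2f}")
```

The ranked list plays the role of the provisional diagnosis (top class) and differentials (the rest).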

The CNN is trained to improve its accuracy and to "teach" itself to identify new lesions using additional functions such as back-propagation: when the network selects the wrong output class, back-propagation adjusts the weights assigned to traits so that the correct class becomes more likely next time [1].
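A minimal sketch of the weight-update idea, assuming a single fully connected output layer with softmax and cross-entropy loss (a common combination, assumed here rather than taken from the source). Full back-propagation passes the same error signal backwards through every convolutional and fully connected layer using the chain rule; this example updates only the final layer, and all numbers and the `train_step` helper are hypothetical.

```python
import math


def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def train_step(features, weights, biases, true_class, learning_rate=0.3):
    """One gradient-descent update of a final fully connected layer.

    With softmax and cross-entropy loss, the error signal for each class score is
    (predicted probability - 1) for the true class and (predicted probability) otherwise.
    Weights and biases are updated in place. Returns the probability given to the
    true class *before* the update.
    """
    scores = [sum(w * x for w, x in zip(row, features)) + b for row, b in zip(weights, biases)]
    probs = softmax(scores)
    for k in range(len(weights)):
        error = probs[k] - (1.0 if k == true_class else 0.0)
        # Move each weight against its share of the error
        for i, x in enumerate(features):
            weights[k][i] -= learning_rate * error * x
        biases[k] -= learning_rate * error
    return probs[true_class]


# Hypothetical 3-class layer; the correct class for this image is index 1
features = [0.9, 0.1, 0.4]
weights = [[2.0, -1.0, 0.5], [-0.5, 1.5, 0.2], [0.3, 0.2, 1.0]]
biases = [0.0, 0.0, 0.0]

history = [train_step(features, weights, biases, true_class=1) for _ in range(10)]
print([round(p, 2) for p in history])  # probability of the correct class rises step by step
```

Because the layer initially favours class 0, each update weakens the weights pushing towards that wrong answer and strengthens those for the correct class.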

What are the benefits of convolutional neural networks?

The benefits of CNNs in the diagnosis of skin lesions include accuracy, speed, and low cost.

- The clinical diagnostic accuracy for melanoma depends on the experience and training of the examining doctor; CNNs have been able to perform as well as board-certified dermatologists in limited circumstances, and their accuracy is expected to improve further [6,7].

- CNNs currently take seconds to minutes to arrive at a diagnosis when presented with an image of a skin lesion. Images can be submitted and analysed outside office hours, by anyone with internet access. Compare this short time with the waiting and travel times associated with a dermatologist appointment, which may be several months away or longer.

- The algorithms can be adaptive, learning from new images added over time.

- CNNs are predicted to be able to diagnose lesions for a fraction of the cost of a visit to a dermatologist.

What are the disadvantages of convolutional neural networks?

Caveats about the use of CNNs include the unrealistic expectations of patients and health practitioners, security and privacy issues, and medicolegal accountability.

- There is a substantial amount of excitement surrounding CNN technology, but the advantages will take time to materialise. Massive amounts of data and input are required to 'train' CNNs. Humans are needed to choose which lesions should be imaged and examined by the CNN, and these participating health professionals also need to be trained.

- CNNs and any tools offering diagnostic support will need to be officially approved as medical devices, and then re-approved as their algorithms expand [8].

- CNNs will likely be entirely online, using cloud-based storage. They will need excellent cybersecurity, backup systems in case of database or server failure, and authentication processes to prevent unauthorised access. Encryption and secure transfer protocols are required to store personal health data, and research must only use anonymised data.

- Health professionals using CNNs will need to understand that performance on one dataset does not necessarily carry over to another; there will be incorrect diagnoses, including false positives (eg, overdiagnosis of benign lesions as malignant) and false negatives (eg, missed diagnoses of cancer).

- The medicolegal accountability of health professionals relying on CNNs requires clarification, as there is no notable precedent. Can a computer algorithm be held accountable for a wrong or missed diagnosis?
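False positives and false negatives are usually measured by comparing a CNN's outputs with confirmed diagnoses on a labelled test set. A minimal sketch, assuming hypothetical CNN predictions and hypothetical histology results for eight lesions; sensitivity and specificity are the standard summary measures derived from these counts.

```python
def confusion_counts(predicted, actual, positive="malignant"):
    """Count true/false positives and negatives, treating `positive` as the disease class."""
    counts = {"TP": 0, "FP": 0, "FN": 0, "TN": 0}
    for pred, truth in zip(predicted, actual):
        if pred == positive and truth == positive:
            counts["TP"] += 1  # cancer correctly flagged
        elif pred == positive:
            counts["FP"] += 1  # benign lesion overdiagnosed as malignant
        elif truth == positive:
            counts["FN"] += 1  # cancer missed
        else:
            counts["TN"] += 1  # benign lesion correctly identified as benign
    return counts


# Hypothetical CNN outputs and hypothetical histology results for eight lesions
predicted = ["malignant", "malignant", "benign", "benign", "benign", "benign", "malignant", "benign"]
actual = ["malignant", "benign", "benign", "malignant", "benign", "benign", "malignant", "benign"]

counts = confusion_counts(predicted, actual)
sensitivity = counts["TP"] / (counts["TP"] + counts["FN"])  # share of cancers detected
specificity = counts["TN"] / (counts["TN"] + counts["FP"])  # share of benign lesions correctly cleared
print(counts, round(sensitivity, 2), round(specificity, 2))
```

Running the same count on a different dataset can give quite different figures, which is why performance on one dataset does not automatically carry over to another.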